In the last few days there has been a mini splurge of papers reviewing exome capture technologies, and I thought it would be useful to write an overview of them. I have been involved in several comparison papers and so am aware of the limitations of comparison experiments. Many comparison publications fail to give readers the one answer they are looking for: a clear "which is better" statement. In fact the Sulonen paper discussed below says "the question often asked from a sequencing core laboratory thus is: “Which exome capture method should I use?”" They do appear to skirt the issue in their final conclusions though.

GenomeWeb has reviewed Michael Snyder’s Nature Biotechnology paper, with an interview, and pointed out many of the highlights from the paper.

Exome-Seq via genome capture protocols, either on arrays or in-solution, has been making quite a splash, with many hundreds or even thousands of samples being published. In-solution methods seem to have won out, which is not surprising given the throughput requirements of researchers. Exome-Seq has become pretty common in many labs, allowing an interesting portion of the genome to be interrogated more cost effectively than whole genome sequencing (WGS) would allow. Even a $1000 genome might not supplant Exome-Seq, as the number of samples that can be run is significantly higher and this is likely to give many projects the power to discover significant variants, albeit only in the targeted regions of course.

Exome-Seq kits vary significantly in the regions and amount of genome captured. Unfortunately the latest kits from each provider are not easily available on UCSC as annotation tracks for comparison. If you have a specific set of genes you are interested in, you need to go out and find this information yourself. Both Agilent and Illumina require you to register on their websites to download tracks. Nimblegen's are available from their website.
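Once you have downloaded a kit's target track, checking how well it covers a region of interest is simple interval arithmetic. The following is a minimal sketch, assuming the track is a plain BED file with half-open coordinates; the function names and the example coordinates are hypothetical and not taken from any vendor's files.

```python
def parse_bed(lines):
    """Parse BED lines into {chrom: [(start, end), ...]}.

    Blank lines and track/browser/comment lines are skipped.
    """
    by_chrom = {}
    for line in lines:
        if not line.strip() or line.startswith(("track", "browser", "#")):
            continue
        chrom, start, end = line.split()[:3]
        by_chrom.setdefault(chrom, []).append((int(start), int(end)))
    return by_chrom


def merge_intervals(intervals):
    """Merge overlapping or touching half-open (start, end) intervals."""
    merged = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def covered_bases(region, targets):
    """Count the bases of region (start, end) covered by any target interval."""
    r_start, r_end = region
    total = 0
    for t_start, t_end in merge_intervals(targets):
        overlap = min(r_end, t_end) - max(r_start, t_start)
        if overlap > 0:
            total += overlap
    return total


# Hypothetical kit targets and a hypothetical exon of interest.
kit = parse_bed(["track name=example_kit", "chr1\t100\t200", "chr1\t150\t300"])
exon_chrom, exon_start, exon_end = "chr1", 180, 400
n = covered_bases((exon_start, exon_end), kit.get(exon_chrom, []))
print(f"{n} of {exon_end - exon_start} bases targeted")
```

Running the same check against each vendor's track gives a quick per-gene view of which kit actually targets the exons you care about.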

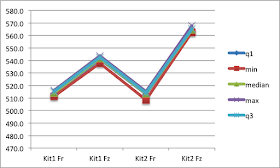

Table 1: Sulonen et al produce a comprehensive table comparing Agilent to Nimblegen and this has helped with some of the detail in my table below. I have chosen not to include details on what is captured as this is still changing quite rapidly. I have instead focussed on factors likely to impact projects such as input DNA requirements, pooling and time.

Table 1

Parla et al:

A comparative analysis of exome capture. Genome Biol. 2011 Sep 29;12(9):R97.

Dick McCombie's lab at Cold Spring Harbor compared two exome capture kits and found that both performed well. Both kits focussed on CCDS capture and as such did not capture everything researchers may be interested in. Both were sequenced on the Illumina platform.

Asan et al:

Comprehensive comparison of three commercial human whole-exome capture platforms. Genome Biol. 2011 Sep 28;12(9):R95.

This group compared one array based kit with two in-solution versions: Nimblegen's array and in-solution kits and Agilent's SureSelect kit. They used the first Asian DNA sample published in Nature 2008. They also reported the differences in regions captured by these kits. This is mainly a design decision and there is no reason I am aware of that would prevent each company from targeting exactly the same regions. Asan et al found that Nimblegen produced better uniformity at 30-100 fold coverage. All platforms called SNPs equally well. They compared SNP calls from sequencing to array based genotyping on Illumina 1M chips and reported >99% concordance between sequencing and arrays. They also discuss the advantages of in-solution methods over array based ones. Sequencing was HiSeq PE90bp and the data were submitted to SRA.
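For readers who want to run this kind of concordance check on their own data, the calculation can be sketched as below. This is only an illustration under my own assumptions, not the method used by Asan et al: genotypes are held as unordered allele strings keyed by a hypothetical (chromosome, position) tuple, and only sites genotyped on both platforms are compared.

```python
def genotype_concordance(seq_calls, array_calls):
    """Fraction of sites called on both platforms where the genotypes agree.

    Each input maps a site key, e.g. (chrom, pos), to a genotype string
    such as "AG". Alleles are sorted so that "AG" and "GA" compare equal.
    """
    shared = seq_calls.keys() & array_calls.keys()
    if not shared:
        raise ValueError("no sites in common between the two call sets")
    matches = sum(
        sorted(seq_calls[site]) == sorted(array_calls[site]) for site in shared
    )
    return matches / len(shared)


# Toy data: two shared sites, one of which agrees.
seq = {("chr1", 10): "AG", ("chr1", 20): "CC", ("chr2", 5): "TT"}
arr = {("chr1", 10): "GA", ("chr1", 20): "CT", ("chr3", 1): "AA"}
print(genotype_concordance(seq, arr))  # 0.5
```

In practice you would also want to restrict the comparison to sites above a minimum sequencing coverage, since concordance depends on depth.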

Sulonen et al:

Comparison of solution-based exome capture methods for next generation sequencing. Genome Biol. 2011 Sep 28;12(9):R94.

Sulonen et al used a single control DNA across two kits each from Agilent and Nimblegen. They found that Nimblegen generated less off-target sequence and showed more specific targeting and enrichment than Agilent. The Nimblegen kit was most efficient and captured the exome targeted with just 20 fold coverage. Agilent produced fewer duplicate reads.

The 2010 Genome Biology paper by Bainbridge et al discussed duplicate reads, their suggestion being that these come from low complexity libraries. They also stated that these can be difficult to screen out. We have been looking at library indexing approaches that could incorporate a random sequence in the index read. This would allow PCR duplicates to be removed quite easily.

Sulonen et al again reported the negative impact of GC content on capture and said the long baits on the Agilent platform appeared to be slightly more impacted by this. Interestingly they reported that where a SNP was heterozygous, more reference alleles were called than would have been expected, and explained this as a result of the capture probes being designed to the reference allele. However the genotype concordance of sequencing to arrays, this time on Illumina 660W Quad chips, was >99% from a coverage of just 11 fold. The authors don't do a great job of saying what they did in the lab. They report sequencing reads of 60-100bp, but the sequencing methods don't say whether this is single or paired end, nor what instrument or chemistry was used. They did submit their data to SRA though.
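To show why a random sequence in the index read would make PCR duplicates easy to remove, here is a minimal sketch. It assumes each aligned read carries its random tag alongside its mapping position; the `Read` record and its field names are hypothetical and do not correspond to any particular aligner or file format.

```python
from collections import namedtuple

# Hypothetical minimal read record: tag is the random sequence from the index read.
Read = namedtuple("Read", "name chrom pos strand tag")


def remove_pcr_duplicates(reads):
    """Keep the first read seen for each (chrom, pos, strand, tag) combination.

    Reads sharing a position but carrying different random tags are treated
    as independent molecules and are all kept.
    """
    seen = set()
    kept = []
    for read in reads:
        key = (read.chrom, read.pos, read.strand, read.tag)
        if key not in seen:
            seen.add(key)
            kept.append(read)
    return kept


reads = [
    Read("r1", "chr1", 100, "+", "ACGT"),
    Read("r2", "chr1", 100, "+", "ACGT"),  # same position and tag: PCR duplicate
    Read("r3", "chr1", 100, "+", "TTGA"),  # same position, different tag: kept
]
print([r.name for r in remove_pcr_duplicates(reads)])  # ['r1', 'r3']
```

Position-only duplicate marking would have discarded r3 as well, which is the gain from the random tag. A real implementation would also need to tolerate sequencing errors in the tag itself.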

Clark et al:

Performance comparison of exome DNA sequencing technologies. Nat Biotechnol. 2011 Sep 25. doi: 10.1038/nbt.1975

Michael Snyder's lab at Stanford compared exome capture kits from Agilent, Illumina and Nimblegen using the same human sample. They found that Nimblegen covered the fewest regions but required the lowest amount of sequencing, whilst Agilent and Illumina covered more regions but needed higher sequence coverage. Additionally Illumina captured non-coding regions and regulatory sequence not targeted by the other platforms; this is going to be a key development for some researchers. Lastly this group compared the exome data to whole genome sequencing of the same samples and interestingly found that Exome-Seq discovered additional small variants missed by WGS.

Some interesting stats from the sequencing data include off-target reads of one third for Illumina compared to 13% and 9% for Agilent and Nimblegen respectively. Illumina did respond to this in a GenomeWeb article, stating that their new TruSeq kits reduced duplication rates to generate far better results. Genomic loci high in GC were less well targeted, and Agilent performed best in the regions where data could be compared. Illumina captured the most SNPs but also targeted the most sequence, so no real surprise there. Where the three platforms overlapped Nimblegen was most efficient. HiSeq

Natsoulis, G. et al., 2011.

A Flexible Approach for Highly Multiplexed Candidate Gene Targeted Resequencing. PLoS ONE, 6(6).

I wanted to include this PLoS ONE paper as the group took quite a different approach, which may well be a very useful one for other groups. Instead of purchasing a whole exome capture kit, Natsoulis et al designed 100bp oligos as baits to the human exome and published these as an open resource. Now anyone can order the baits they are interested in and perform custom capture in their own lab.

How much does Exome-Seq cost?

Snyder’s paper included some comments on the costs of capture, which they said were "highly negotiable". The biggest change coming is in the pooling strategies, with all platforms moving to six or eight plex pooling before capture and Illumina's custom capture kits now supporting a 12 plex reaction. This makes the workflow much easier for large numbers of samples. I have been following this for a while and costs are dropping so rapidly as to make comparison or projection a bit of a waste of time. The number of sequencing lanes required is also changing as the density on Illumina continues to rise. Illumina handily provide a table estimating the number of exomes that can be run per lane on HiSeq and other platforms: HiSeq 600 Gb v3 chemistry allows 7 exomes per lane or 56 per flowcell at 50x coverage. And an exome might be achievable on a MiSeq next year. I have estimated our internal exome costs to be about £300-450 depending on coverage and read type, inclusive of library prep, capture and sequencing. We are only just starting to run these in my lab though, so I'll soon find out how close my estimates really are.
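The exomes-per-lane figure is a back-of-envelope calculation that is easy to redo for your own kit and coverage target. The sketch below is my own rough model, not Illumina's: it assumes the 600 Gb run is split evenly over 16 lanes (37.5 Gb per lane), and the 62 Mb target size and 60% on-target fraction are illustrative values I have picked rather than numbers taken from Illumina's table.

```python
import math


def exomes_per_lane(lane_yield_bases, target_bases, mean_coverage, on_target_fraction):
    """Estimate how many exomes fit in one lane at a given mean coverage.

    Raw sequence needed per exome is target size x coverage, inflated by
    the fraction of reads that actually land on target.
    """
    if not 0 < on_target_fraction <= 1:
        raise ValueError("on_target_fraction must be in (0, 1]")
    raw_per_exome = target_bases * mean_coverage / on_target_fraction
    return math.floor(lane_yield_bases / raw_per_exome)


# Illustrative assumptions only (see the text above).
per_lane = exomes_per_lane(
    lane_yield_bases=37.5e9,   # assumed: 600 Gb run / 16 lanes
    target_bases=62e6,         # assumed target size
    mean_coverage=50,
    on_target_fraction=0.6,    # assumed
)
print(per_lane, per_lane * 8)  # 7 56 (exomes per lane, per 8-lane flowcell)
```

Changing the on-target fraction or target size shifts the answer quickly, which is one reason the kit comparisons above matter for cost.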

Do you really need to target the exome?

A lot of people I have talked to are now looking at screening pipelines which use Exome-Seq ahead of WGS to reduce the number of whole human genomes to be sequenced. The idea is that the exome run will find mutations that can be followed up in many cases, and only those samples with no hits need be selected for WGS.

Working in a Cancer Genomics Core Facility, I am also wondering how the smaller targeted panels like Illumina's demo TruSeq Custom Capture Kit: Cancer Panel will fit into this screening regime. These can be multiplexed to a higher level and target many fewer regions, but cost a lot less to sequence and analyse. Perhaps the start of the process should be this, or even next-gen PCR based 'capture' from the likes of Fluidigm?

References:

1. Parla et al: A comparative analysis of exome capture. Genome Biol. 2011 Sep 29;12(9):R97.

2. Asan et al: Comprehensive comparison of three commercial human whole-exome capture platforms. Genome Biol. 2011 Sep 28;12(9):R95.

3. Sulonen et al: Comparison of solution-based exome capture methods for next generation sequencing. Genome Biol. 2011 Sep 28;12(9):R94.

4. Clark et al: Performance comparison of exome DNA sequencing technologies. Nat Biotechnol. 2011 Sep 25. doi: 10.1038/nbt.1975

5. Natsoulis, G. et al., 2011. A Flexible Approach for Highly Multiplexed Candidate Gene Targeted Resequencing. PLoS ONE, 6(6).

6. Bainbridge, M.N. et al., 2010. Whole exome capture in solution with 3Gbp of data. Genome Biology.

7. Kingsmore, S.F. & Saunders, C.J., 2011. Deep Sequencing of Patient Genomes for Disease Diagnosis: When Will It Become Routine? Science Translational Medicine, 3(87), p.1-4. A review of Bainbridge et al and a discussion of WGS and targeted sequencing or Exome-Seq. They also suggest that an exome costs 5-15 fold less than a WGS.

8. Maxmen, A., 2011. Exome Sequencing Deciphers Rare Diseases. Cell, 144, p.635-637. A review of the Undiagnosed Diseases Program at NIH, which uses Exome-Seq and high-resolution microarrays for genotyping. They mention the team’s first reported discovery of a new disease, which was published in The New England Journal of Medicine.