FFPE makes DNA extraction difficult: DNA yields are generally low, quality can be affected by fixation artefacts, and the number of amplifiable copies of DNA is reduced by strand-breaks and other DNA damage. Add to this the almost total lack of standardisation in fixation protocols and the widely differing ages of samples, and it's not surprising that FFPE causes a headache for anyone who wants to sequence genomes and exomes. In this post I'm going to look at alternative fixatives to formalin, QC methods for assessing whether FFPE samples are suitable for NGS, some recent papers, and new methods to fix FFPE damage.

Why do we use formalin-fixation: The ideal material for molecular studies is fresh-frozen (FFZN) tumour tissue, as its nucleic acids are of high quality. But many cancer samples are fixed in formalin for pathological analysis and stored as Formalin-Fixed Paraffin-Embedded (FFPE) blocks, which preserves tissue morphology but damages nucleic acids. The most common artefacts are strand-breaks and C>T base substitutions; the latter arise when cytosine is deaminated to uracil, which polymerase reads as thymine during PCR amplification. Both reduce the amount of correctly amplifiable template DNA in a sample, and this must be considered when designing NGS experiments.
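A quick, rough way to look for this signature in sequencing data is to ask what fraction of single-nucleotide variant calls are C>T, counting G>A as well because that is the same change seen from the opposite strand. The sketch below is a hypothetical helper (`deamination_fraction` is not from any published tool) and assumes calls are already available as (ref, alt) pairs. Genuine tumour mutations are also often C>T, so the number is only meaningful when compared with a matched FFZN sample.

```python
from collections import Counter
from collections.abc import Iterable

# Deamination of a C on the reverse strand shows up as G>A against the
# forward-strand reference, so both changes are counted as possible artefacts.
DEAMINATION_CHANGES = {("C", "T"), ("G", "A")}


def deamination_fraction(snvs: Iterable[tuple[str, str]]) -> float:
    """Fraction of (ref, alt) SNV calls that are C>T or G>A (hypothetical helper)."""
    counts = Counter((ref.upper(), alt.upper()) for ref, alt in snvs)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    damaged = sum(n for change, n in counts.items() if change in DEAMINATION_CHANGES)
    return damaged / total
```

For example, `deamination_fraction([("C", "T"), ("G", "A"), ("A", "G"), ("C", "T")])` gives 0.75, since three of the four calls fall into the deamination classes.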

Molecular fixatives: Our Histopathology core recently published a paper in Methods: Tissue fixation and the effect of molecular fixatives on downstream staining procedures. In it they demonstrated that, overall, molecular fixatives preserved tissue morphology as well as formaldehyde for most histological purposes. They presented a table listing the molecular-friendly fixatives and reporting the average read-lengths achievable from DNA and RNA (median read-lengths of 725 and 655 respectively). All the fixatives reviewed have been shown to preserve nucleic acid quality, assessed by qPCR Ct values or through RNA analysis (RIN, rRNA ratio, etc.). But no-one has compared them at the genome level, and the cost of sequencing probably keeps this kind of basic test beyond the reach of most individual labs.

The paper also presents a tissue-microarray of differently fixed samples, a unique resource that allowed them to investigate the effects of molecular fixatives on histopathology. All methods preserved morphology, but staining results varied widely. This highlights the importance of performing rigorous comparisons, even for the most basic procedures in a study (sorry to any histopathologists reading this, but I am writing from an NGS perspective).

The first paper describing a molecular fixative (UMFIX) appeared back in 2003. In it the authors compared FFZN and UMFIX tissue for DNA and RNA extraction and found no significant differences between UMFIX and FFZN tissues on PCR, RT-PCR, qPCR, or expression microarrays. Figure B from their paper shows how similar the RNA bioanalyser profiles from UMFIX and FFZN were.

Bioanalyser RNA profiles: UMFIX (top) and FFZN (bottom)

Recent FFPE papers: A very recent and really well written paper by Hedegaard et al (May 2014) compared FFPE and FFZN tissues to evaluate their use in exome and RNA-seq. They used two extraction methods for DNA and three for RNA, with different effects on quality and quantity. Only 30% of exome libraries worked, but those that did showed 70% concordance (FFZN:FFPE). They made RNA-seq libraries from 20-year-old samples with 90% concordance, and found a set of 1,500 genes that appear to be affected by fixation. Their results certainly make NGS analysis of FFPE samples seem much more feasible than previous work suggested. Interestingly, they made almost no changes to the TruSeq exome protocol, so some fiddling with library prep (perhaps adding more DNA to reduce the impact of strand-breaks, or fixing FFPE damage; see below) would help a lot. The RNA-seq libraries were made using RiboZero and ScriptSeq. Figure 2 from their paper shows the exome variants as percentages of common (grey), FFZN-only (white) and FFPE-only (red) calls; there are clear sample issues due to age (11, 7, 3 and 2 years of storage), but the overall results were good.
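The common / FFZN-only / FFPE-only breakdown in a figure like this is straightforward set arithmetic on two call sets. The sketch below assumes variants are keyed as (chrom, pos, ref, alt) tuples and reports percentages of the union of both sets; this is one reasonable definition of concordance, not necessarily the exact one Hedegaard et al used, and `variant_concordance` is a hypothetical name.

```python
from collections.abc import Iterable, Hashable


def variant_concordance(ffzn_calls: Iterable[Hashable],
                        ffpe_calls: Iterable[Hashable]) -> dict[str, float]:
    """Percentages of common, FFZN-only and FFPE-only calls.

    Calls are hashable keys such as (chrom, pos, ref, alt); percentages are
    relative to the union of both call sets (an assumed definition).
    """
    ffzn, ffpe = set(ffzn_calls), set(ffpe_calls)
    union = ffzn | ffpe
    if not union:
        return {"common": 0.0, "ffzn_only": 0.0, "ffpe_only": 0.0}
    n = len(union)
    return {
        "common": 100 * len(ffzn & ffpe) / n,
        "ffzn_only": 100 * len(ffzn - ffpe) / n,
        "ffpe_only": 100 * len(ffpe - ffzn) / n,
    }
```

For example, if FFZN has variants v1 to v4 and FFPE has v1, v2, v3 and v5, three of the five distinct variants are shared: 60% common and 20% in each sample-specific group.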

Other recent papers looking at FFPE include: Ma et al (Apr 2014), who developed a bioinformatics method for gene fusion detection in FFPE RNA-seq; Li et al (Jan 2014), who investigated the effect of molecular fixatives on routine histopathology and molecular analysis, and achieved high-quality array results with as little as 50ng of RNA; Norton et al (Nov 2012), who manually degraded RNA in 9 pairs of matched FFZN/FFPE samples and ran both NanoString and RNA-seq, finding that both gave reliable gene expression results from degraded material; and Sinicropi et al (Jul 2012), who developed and optimised RNA-seq library prep and informatics protocols. Most recently, Cabanski et al published what looks like the first RNA-access paper (not open access, so I haven't been able to read it). RNA-access is Illumina's new kit for FFPE that combines RNA-seq prep from any RNA (degraded or not) with exome capture (we're about to test this once we get samples).

QC of FFPE samples: It is relatively simple to extract nucleic acids from FFPE tissue and quantify how much DNA or RNA there is, but a high failure rate in subsequent library prep, due to low quality, is likely to be more of a headache than most labs can tolerate. Fortunately several groups have been developing QC methods for FFPE nucleic acids. Here I'll focus mostly on those for DNA.

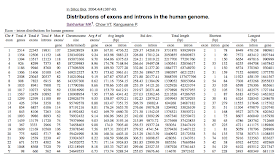

Van Beers et al published an excellent paper in 2006 on a multiplex PCR QC for FFPE DNA. It was developed for CGH arrays and amplifies 100, 200, 300 and 400bp fragments from non-overlapping target sites in the GAPDH gene in the FFPE template DNA. Figure 2 from their paper (reproduced above) shows results from a good (top) and a bad (bottom) FFPE sample.
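Why the longer bands are the first to disappear can be sketched with a simple model: if strand-breaks fall independently with some per-base probability p, a template spanning L bases survives intact with probability of roughly (1 − p)^L. The code below applies this to amplicon sizes like the 100 to 400bp bands and converts input mass to genome copies, assuming about 3.3 pg per haploid human genome. The break rate in the demo is an illustrative assumption, not a value from the Van Beers paper, and real FFPE damage is unlikely to be perfectly uniform.

```python
# Toy model of why longer amplicons fail first in damaged FFPE DNA.
# Assumptions: strand-breaks are independent and uniformly distributed,
# and one haploid human genome weighs ~3.3 pg.
HAPLOID_GENOME_PG = 3.3


def intact_fraction(amplicon_bp: int, break_prob_per_base: float) -> float:
    """Approximate probability that no break falls within an amplicon."""
    return (1.0 - break_prob_per_base) ** amplicon_bp


def amplifiable_copies(input_ng: float, amplicon_bp: int,
                       break_prob_per_base: float) -> float:
    """Expected number of intact templates for one amplicon in input_ng of DNA."""
    genome_copies = input_ng * 1000.0 / HAPLOID_GENOME_PG  # ng -> pg -> copies
    return genome_copies * intact_fraction(amplicon_bp, break_prob_per_base)


if __name__ == "__main__":
    p = 1 / 200  # illustrative break rate, chosen only for demonstration
    for size in (100, 200, 300, 400):
        print(f"{size} bp: {intact_fraction(size, p):.3f} intact, "
              f"~{amplifiable_copies(10, size, p):.0f} copies from 10 ng")
```

Because the surviving fraction falls geometrically with amplicon length while copy number scales linearly with input, doubling the input mass doubles the intact templates at every size, which is the reasoning behind adding more DNA to FFPE library preps.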

Whilst the above method is very robust and generally predictive of how well an FFPE sample will work in downstream molecular applications, it is not high-throughput. Other methods generally use qPCR as the readout, as it is quick and can be run at very high throughput. Illumina sell an FFPE QC kit that compares test samples against a control template and uses a deltaCq method to determine whether samples are suitable for arrays or NGS. LifeTech sell a similar kit for RNA, Arcturus sample QC, which uses two β-actin probes and assesses quality via their 3'/5' ratio. Perhaps the ideal approach would be a set of exonic probes multiplexed as 2-, 3- or 4-colour TaqMan assays. This could be used on DNA and RNA and would bring the benefits of the Van Beers and LifeTech methods to all sample types.
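The arithmetic behind both kinds of qPCR QC can be sketched in a few lines. Here deltaCq is taken as the test sample's Cq minus the control template's Cq (for the same input amount, a larger value means fewer amplifiable copies), and the 3'/5' ratio converts the Cq difference between two amplicons on the same transcript into a relative abundance, assuming both assays share the same amplification efficiency. These functions are hypothetical illustrations rather than either vendor's software, and `max_delta_cq` is a placeholder: the real cut-off should come from the kit documentation.

```python
def delta_cq(sample_cq: float, control_cq: float) -> float:
    """deltaCq = Cq(test sample) - Cq(control template), same assay and input."""
    return sample_cq - control_cq


def passes_delta_cq(sample_cq: float, control_cq: float, max_delta_cq: float) -> bool:
    """Hypothetical pass/fail rule; max_delta_cq is a placeholder threshold."""
    return delta_cq(sample_cq, control_cq) <= max_delta_cq


def ratio_3to5(cq_3prime: float, cq_5prime: float, efficiency: float = 1.0) -> float:
    """3'/5' abundance ratio from two assays on the same cDNA.

    Starting quantity scales as (1 + E) ** -Cq, where E = 1.0 means perfect
    doubling each cycle, so the ratio is (1 + E) ** (Cq_5' - Cq_3').
    """
    return (1.0 + efficiency) ** (cq_5prime - cq_3prime)
```

With perfect efficiency, a 5' assay that crosses threshold three cycles after the 3' assay gives `ratio_3to5(22.0, 25.0) == 8.0`: the 5' end is eight-fold under-represented, as you might expect when reverse transcription of degraded transcripts starts from the 3' end.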

Fixing FFPE damage: Another option is to fix the damage caused by formalin fixation. This is attractive as there are literally millions of FFPE blocks, and many have long-term follow-up data. A paper in Oncotarget in 2012 reported the impact of using uracil-DNA glycosylase (UDG) to reduce the C>T artefacts caused by deamination of cytosine to uracil. They also showed that this can be incorporated into current methods as a step prior to PCR, something we've been doing for qPCR for many years. There seem to be no strong reasons not to incorporate this as a step in any NGS workflow, as it has little impact on high-quality templates.
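The logic of the UDG step can be illustrated with a deliberately simplified toy model (not a simulation of real PCR): a deaminated cytosine is written as U, the polymerase places A opposite it, and the next copy therefore carries T where the original strand had C. UDG removes the uracil, leaving an abasic site the polymerase cannot read through, so in the toy any strand containing U simply stops acting as a template. The function names are hypothetical, and strand orientation is ignored to keep the example short.

```python
# Toy model of cytosine deamination and UDG treatment.
# Sequences are single strands; orientation is ignored for simplicity.
PAIRING = {"A": "T", "T": "A", "G": "C", "C": "G", "U": "A"}  # A is placed opposite U


def copy_strand(template: str) -> str:
    """One round of copying a strand (orientation ignored)."""
    return "".join(PAIRING[base] for base in template)


def pcr_two_rounds(template: str) -> str:
    """Copy twice, giving a product with the same sense as the template."""
    return copy_strand(copy_strand(template))


def udg_treat(templates: list[str]) -> list[str]:
    """UDG excises uracil, leaving an abasic site that blocks the polymerase;
    in this toy, any strand containing U is dropped as a template."""
    return [t for t in templates if "U" not in t]


if __name__ == "__main__":
    original = "ACGTC"
    damaged = "AUGTC"  # the C at position 2 has been deaminated to U
    print(pcr_two_rounds(damaged))  # ATGTC: a false C>T
    print([pcr_two_rounds(t) for t in udg_treat([original, damaged])])  # ['ACGTC']
```

The toy also shows the trade-off: damaged strands are removed rather than repaired, so UDG costs some template, but a high-quality sample with few deaminated bases loses almost nothing.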

NEB offer a cocktail of enzymes in their PreCR kit, which repairs damaged DNA templates. It is designed to work on modified bases, nicks and gaps, and blocked 3' ends. They had a poster at AGBT demonstrating the utility of the method, showing increased library yields and success rates with no increase in bias in the sequencing data.

Illumina also have an FFPE restoration kit; restoration is achieved through treatment with DNA polymerase, DNA repair enzyme and ligase, plus a modified Infinium WGA reaction (see here for more details).

These cocktails could almost certainly be extended: MUTYH works to fix 8-oxo-G damage; CEL1, used in TILLING analysis to create strand-breaks at mismatches, could be included; and many other DNA repair enzymes could be added to a mix to remove nearly all compromised bases. It may be possible to go a step further and repair compromised bases rather than just neutralise their effect.

Whatever the case, it looks very much as though FFPE samples are going to be processed in a more routine manner very soon.