We recently bought two HiSeq 4000 instruments to run larger RNA-seq and exome projects. Over the next few months we'll be transitioning other library types where we can, and think perhaps 65-85% of the work we do can be ported over from the 2500. Look on eBay and you can find multiple HiSeq instruments for sale, and I've been contacted by increasing numbers of people wanting to sell 2500s - but buying a second-hand machine is not as simple as it looks.

Some comments and analysis from the exciting and fast-moving world of Genomics. This blog focuses on next-generation sequencing and microarray technologies, although it is likely to go off on tangents from time to time.

Thursday, 17 March 2016

Wednesday, 16 March 2016

How many single cells are needed in a single-cell sequencing experiment

The landscape for gene expression analysis (and many other analyses) is moving away from measurements made in bulk tissues to single-cell methods. Bulk measurements are an average of the cells in the sample and so cannot truly reveal the subtleties of the biology in that sample. To get closer to the truth we do need to adopt single-cell methods, and this means making a choice as to which system you might run in your lab. Of course bulk measurements are still very powerful in understanding biology and we should not stop using the methods we've worked with for decades - but we should be thinking carefully about whether the specific question being asked could be better answered with, or only answered with, single-cell methods.

I started writing this post because I'm getting my head around the different methods for single-cell analysis. I'm trying to keep my focus on two areas right now: single-cell mRNA-seq analysis and copy number variation. For both, a question that comes up all the time is "How many single cells are needed in my experiment?" and right now that is not a question I feel I can give a robust answer to!

In their Nature Reviews Genetics review article Shapiro, Biezuner and Linnarsson use some relatively simple back-of-the-envelope calculations that lead them to conclude "hundreds or thousands of single cells will need to be analysed to answer targeted questions in single tissues". The current systems on offer allow capture and sequencing of cells in this range, so in theory any of them will be usable for experiments. I've briefly summarised some of the main contenders today, including DROP-seq, Fluidigm, Wafergen and 10X Genomics.
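To make the "how many cells?" question a little more concrete, here is a minimal Python sketch of one possible back-of-the-envelope calculation. It is not the calculation from the review: it simply assumes cells are sampled independently (binomial sampling), that a cell type of interest makes up a fraction `f` of the tissue, and that you want to capture at least `k` cells of that type with a given confidence. The function names and example numbers are my own illustrative assumptions, and it ignores capture efficiency, doublets and dropouts.

```python
import math


def prob_at_least(n, f, k):
    """P(X >= k) for X ~ Binomial(n, f), summed in log space for stability."""
    if k <= 0:
        return 1.0
    if n < k:
        return 0.0
    below = 0.0  # accumulates P(X < k)
    for i in range(k):
        log_term = (
            math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
            + i * math.log(f)
            + (n - i) * math.log1p(-f)
        )
        below += math.exp(log_term)
    return max(0.0, 1.0 - below)


def cells_needed(f, k=1, confidence=0.95):
    """Smallest number of sampled cells giving >= k cells of a type at
    frequency f with the requested confidence (assumption: binomial sampling)."""
    if not 0.0 < f < 1.0:
        raise ValueError("f must be strictly between 0 and 1")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    n = max(k, 1)
    while prob_at_least(n, f, k) < confidence:
        n += 1
    return n


if __name__ == "__main__":
    # Hypothetical scenarios: a 1% and a 0.1% sub-population.
    for freq in (0.01, 0.001):
        for min_cells in (1, 10):
            print(freq, min_cells, cells_needed(freq, min_cells))
```

Even under these simplified assumptions, rare populations quickly push the requirement into the hundreds or thousands of cells, which is in line with the range quoted above.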

The next few years are likely to see the continued development of single-cell systems. Which platform labs should invest in is going to be difficult to answer, and this feels very much like the early days of NGS when we were choosing between Illumina and SOLiD: expensive instruments, rapidly developing technologies and uncertainty about which will come out top dog.

I'll be adding to this table over the next few months as I look into other systems. Feel free to suggest other technologies to add, and do point out inaccuracies where you see them.

Thursday, 10 March 2016

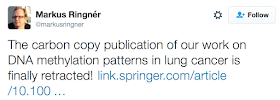

Paper retracted after @Exome-seq alert on Twitter

I thought I'd post a brief update on a story from last year where I was alerted by @MattiasAine via one of my TwitterBots about a group ripping off his Karlsson 2014 paper. The evidence was pretty damning - almost identical data, analysis, and figures simply rotated in the Zhao 2015 version. I alerted the editors of Tumor Biology to the problems with the 2015 paper and it has now been retracted and taken down from the site.

An opportunity for you in the Genomics Core facility?

I've just posted an advert for a Principal Scientific Associate to join the Genomics Core as the Deputy Manager. This is going to be a key position in the team as we're building our single-cell genomics capabilities. We're looking for someone with a PhD in a relevant subject or with equivalent experience. You'll have extensive hands-on laboratory experience in molecular biology and NGS, and ideally you'll have experience with single-cell genomics technologies. This is a senior post in our team and you'll have the opportunity to make a significant contribution to the science in our institute.

The Genomics Core at the Cancer Research UK Cambridge Institute, a department of the University of Cambridge, is one of several core facilities in one of Europe's top cancer research institutes. We are situated on the Addenbrooke's Biomedical Campus, and are part of both the University of Cambridge School of Clinical Medicine and the Cambridge Cancer Centre. The Institute's focus is high-quality basic and translational cancer research and we have several research groups with an excellent track record in cancer genomics 1, 2, 3. The majority of data generated by the Genomics Core facility is Next Generation Sequencing, and we support researchers at the Cambridge Institute, as well as nine other University Institutes and Departments within our NGS collaboration.

You can get more information about the lab on our website. You can get more information about the role, and apply on the University of Cambridge website.

Monday, 7 March 2016

A personal journey in Genomics

Eric Minikel is lead author on a paper that came out in STM in January: Quantifying prion disease penetrance using large population control cohorts. The story is an incredibly personal one involving his wife Sonia, which Eric documents at the cureFFI.org blog. The paper reports on the penetrance of variants in the prion protein gene and the risk of actually getting prion disease. They analysed 16,000 case exomes and 60,706 population controls, verified their findings in data provided by 23andMe (read their coverage as well), and found that missense variants in PRNP are 30x more common in the population than expected. Unfortunately Eric's wife Sonia Vallabh carries one of the four variants that virtually guarantee the disease will develop, the same one that led to her mother's death from fatal familial insomnia, hence cureFFI.org.

Fig 1 from the STM paper: PRNP variant freq. is 30x higher in controls

Eric and Sonia were not scientists when they first heard about FFI or PRNP. But they retrained and are now both at the Broad Institute in Boston: Eric is in Daniel MacArthur's lab, and Sonia is in the lab of Stuart Schreiber. The STM paper is based on an analysis of the ExAC dataset.

The results reveal the penetrance of the many variants in PRNP and change the view many doctors may have had, that any variant was associated with a 100 percent risk of developing the disease. Eric et al identified benign missense variants and showed others spanned a spectrum of penetrance from 0.1 to ~100%. They provide quantitative estimates of lifetime risk and, importantly, in the paper they discuss the problems of assessing penetrance and risk. Even the ExAC dataset is only "approaching the size and quality required for such analyses", which limits the study, and the sequencing data generated today are imperfect, with many genomic loci presenting challenges for sequencing technologies and variant calling.
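For readers who want a feel for how a lifetime risk estimate can come out of case and control counts, here is a minimal Python sketch using Bayes' rule: P(disease | variant) = P(variant | disease) × P(disease) / P(variant). This is an illustration of the general idea and should not be read as the paper's exact method. The function name, its parameters and every number in the example are hypothetical placeholders, not values from the study.

```python
def lifetime_risk(baseline_risk, case_carriers, n_cases,
                  control_carriers, n_controls):
    """Rough penetrance estimate via Bayes' rule (illustrative assumption).

    baseline_risk    : lifetime risk of the disease in the general population
    case_carriers    : number of cases carrying the variant
    control_carriers : number of population controls carrying the variant
    """
    if n_cases <= 0 or n_controls <= 0:
        raise ValueError("cohort sizes must be positive")
    if control_carriers <= 0:
        raise ValueError("variant not seen in controls; estimate is undefined")
    p_variant_given_disease = case_carriers / n_cases
    p_variant = control_carriers / n_controls
    # Cap at 1.0 because sampling noise can push the raw ratio above 100%.
    return min(1.0, baseline_risk * p_variant_given_disease / p_variant)


if __name__ == "__main__":
    # Hypothetical numbers only: a variant seen in 10 of 10,000 cases and
    # 5 of 50,000 controls, with an assumed baseline lifetime risk of 0.0002.
    print(lifetime_risk(0.0002, 10, 10_000, 5, 50_000))
```

The sketch also shows why control cohort size matters so much: with only a handful of carriers among the controls, the denominator is very noisy and so is the resulting risk estimate.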

Sonia's working in Stuart Schreiber's lab trying to find treatments for human prion diseases. The STM discussion suggests that reducing prion protein expression may be an option. Within the ExAC data they found heterozygous loss-of-function variants in three healthy people, who showed no effect from a 50% reduction in gene dosage for PRNP, so reducing PRNP dosage in patients may be tolerated. Whether they can develop small molecules or other methods to achieve this will be the focus of a lot more research.

The work would have been impossible without the groundwork laid by the ExAC team; see their preprint on bioRxiv: Analysis of protein-coding genetic variation in 60,706 humans.